When using a device like the Tympan for hearing science, it is important to know the maximum loudness that can be produced by your system. If you cannot get loud enough, it can limit the range of hearing loss that you can work with. It is important to know how loud you can get. So, we measured the maximum sound pressure level that can be produced by the Tympan when used with a standard HDA 200 headset from Sennheiser. Let’s see what it can do!

GOAL

Our goal was to measure the maximum sound pressure level (SPL) that the Tympan can produce when driving an HDA200 headset without generating excessive harmonic distortion.

APPROACH

We measured the output of the HDA200 using an artificial ear. We had the Tympan generating test tones at increasing loudness until either (1) we exceeded our self-imposed limit on harmonic distortion or (2) the Tympan could go no louder.

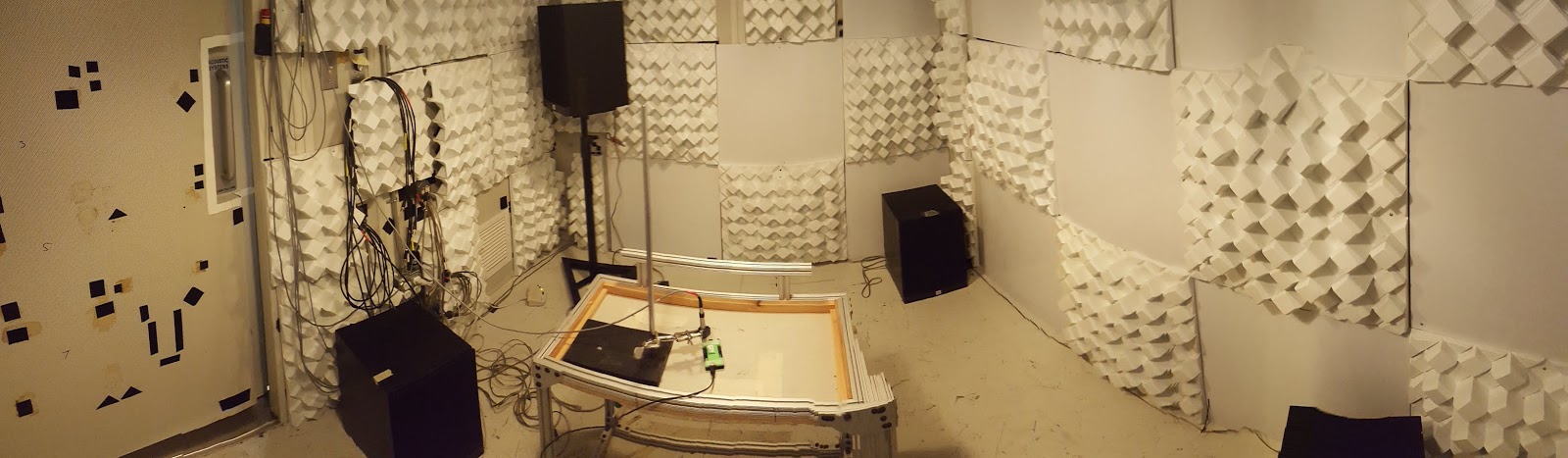

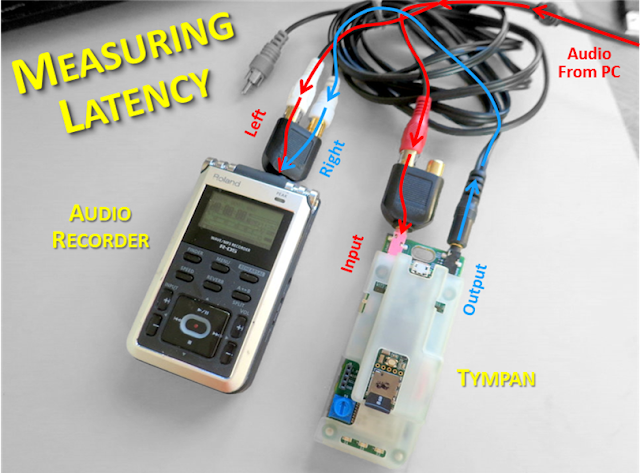

TEST SETUP

In addition to the Tympan Rev D and our HDA 200 headset, we used a Bruel & Kjaer Artificial Ear (4153) along with a Bruel & Kjaer microphone (4192). We used a LabVIEW data acquisition system to record the electrical signal produced by the Tympan and the microphone signal produced by the 4192 from the artificial ear.

TYMPAN FIRMWARE

We programmed the Tympan to produce sine test tones at different frequencies and amplitudes. The Tympan program is on our GitHub here

LABVIEW SOFTWARE

We used a laboratory-grade data acquisition system from National Instruments along with a LabView program to record our data. We recorded the microphone signal from the artificial ear and, per ANSI-ASA-S3.6-2018 (Section 6.1.5), we also recorded the electrical signal produced by the Tympan itself. All data were recorded as WAV files at a sample rate of 96 kHz.

COLLECTING DATA

After placing the headset on the artificial ear, we would command the Tympan to play a steady tone at the desired frequency. We would record the output of the Tympan and of the artificial ear. We would then increment the amplitude and/or frequency and repeat the recording. All data were post-processed using these Matlab scripts.

DISTORTION LIMITS

For this test, we define the maximum sound pressure level (SPL) to be the highest level produced by the headset when the harmonic distortion is still below our limits. Using ANSI-ASA-S3.6-2018 (Section 3.1.5) as a reference, we set our distortion limits to be:

- No more than 2.5% total harmonic distortion

- No more than 2.0% distortion for the second and third harmonic

- No more than 0.3% distortion for the fourth and each higher harmonic

Continuing to take inspiration from S3.6-2018, we assessed the harmonic distortion using the microphone signal for the tones below 6 kHz while, for the tones at 6 kHz and above, we assessed the harmonic distortion using the electrical signal recorded directly from the Tympan.

RESULTS: Sound Pressure Level

The table and figure below show the maximum SPL that we achieved at each test frequency while staying within the distortion limits defined above.

RESULTS: Hearing Level

In addition to reporting the maximum values as sound pressure level (SPL), it is often desired to know the values expressed as “Hearing Level” (HL). HL can be computed from SPL given the “reference equivalent threshold sound pressure level” (RETSPL) that is provided by the headset manufacturer. The RETSPL values for the Sennheiser HDA are published and were included in the table above. As a result, the maximum HL that can be produced by the HDA 200 as driven by the Tympan are shown in the table and in the figure below.

DISCUSSION

Overall output level.For the commonly used audiogram frequency range (125–8000 Hz), the Tympan with the HDA200 can produce up to 108–115 dB SPL (or 79–105 dB HL), depending upon the specific frequency of interest.

Decreasing SPL at Higher FrequenciesIn the extended high frequency range (> 8kHz), we see that the maximum SPL drops modestly as frequency increases. This modest drop is due to the frequency response of the HDA200 headset; the voltage/current provided by the Tympan in this frequency range is consistent with the lower frequencies.

Decreasing HL at Higher Frequencies. Of course, when looking at the HL values at the extended high frequencies (> 8 kHz), the maximum HL values drop dramatically, but this result is due to the lower sensitivity of human hearing at these frequencies and is not related to the performance of the headset or of the Tympan.

Potential Level with Other Headsets. This testing only evaluated the maximum level of the Tympan when used with the HDA200. If the Tympan is used with other headsets, different maximum level values should be expected. For example, we tested the Tympan with consumer earbuds (see here) and saw loudness values of 105-117 dB SPL at 1 kHz, depending upon one's distortion criteria.

Tympan’s Electrical Output. The Tympan provides an electrical signal to drive the headset. It is the headset that converts the electrical signal into acoustic level. Regardless of frequency, the Tympan’s hardware is designed to deliver a clean output up to an amplitude of approximately 1 Vrms into a load of at least 32 ohms. So, if you need more loudness than seen here with the HDA200, you can easily try your own favorite headset or earphones!